AI Needs Its MP3 Moment

The year was 1993. A small team of scientists in Germany finalized a file format that nobody asked for. It seemed like just another technical tweak to shrink a digital file.

It was like figuring out how to make a brick, then mortar. Two simple elements. But suddenly, you aren’t building mud huts anymore. You are building skyscrapers.

In the early 2000s, I was involved in designing MP3 products and experiences. The interfaces were clunky and the storage was tiny. But even then, we knew we were holding something potent enough to challenge the status quo. MP3 did not improve the sound quality of music. Technically, it made the sound worse. It cheated. It worked by leveraging psychoacoustics, stripping away the data humans couldn’t hear anyway.

But MP3 did something more important than quality. It made music liquid.

Suddenly, a song wasn’t trapped on a plastic disc. It flowed into pockets, cars, and across bad internet connections. It transformed music from a product you bought into a utility you breathed.

With AI, the stakes are higher than music.

We have built tools to extend our muscles (the hammer) and our senses (the telescope). But we have always had to adapt to the tools we made. We have never had a tool that extends our reasoning and adapts to us.

AI is the first machine for cognition.

But right now, that cognition is a heavy masterpiece. It lives in massive data centers. It consumes energy measured in power plants. It is as if we invented an automobile that requires a supertanker of fuel just to drive across town.

In the AI industry, there is a lot of effort to fix this with optimizations like “quantization” and “pruning.” But these are just better zip files. They are not the revolution.

The question is: Can we make reasoning light enough to travel? What is the limit to compressing knowledge?

The Physics of Knowledge

Compressing reasoning isn’t like compressing a file. It’s like dehydrating fruit. Remove the right amount of water, and you keep the flavor forever. Remove too much, and it becomes dust. To understand how to do this right, we have to look at how humans compress reality. We rely on Decoders. But a decoder is not just “context.” It is Experience.

Imagine I want to send you a recipe for a specific pizza. Watch what happens to the message as our relationship deepens.

When I write for a Stranger, I have to write the Manual, the whole thing. I list the exact temperatures, the dough hydration ratios, the chemistry of the yeast. The result is perfect, but the “file” is large. This is where AI is today: heavy, explicit, and exhaustive.

When I write for a Colleague, I switch to Shorthand. “Standard dough. High heat. Don’t burn the kajmak.” I stripped away 80% of the data because I trust your skill to fill in the blanks.

But the real magic happens when I text an Old Friend who was with me on a trip to Serbia. I type nine words:

“The kajmak pizza we had in Belgrade last week.”

Instantaneously, the smell, the crunch, the atmosphere, and the taste flood back. We achieved a perfect reconstruction of a complex reality with near-zero data transfer.

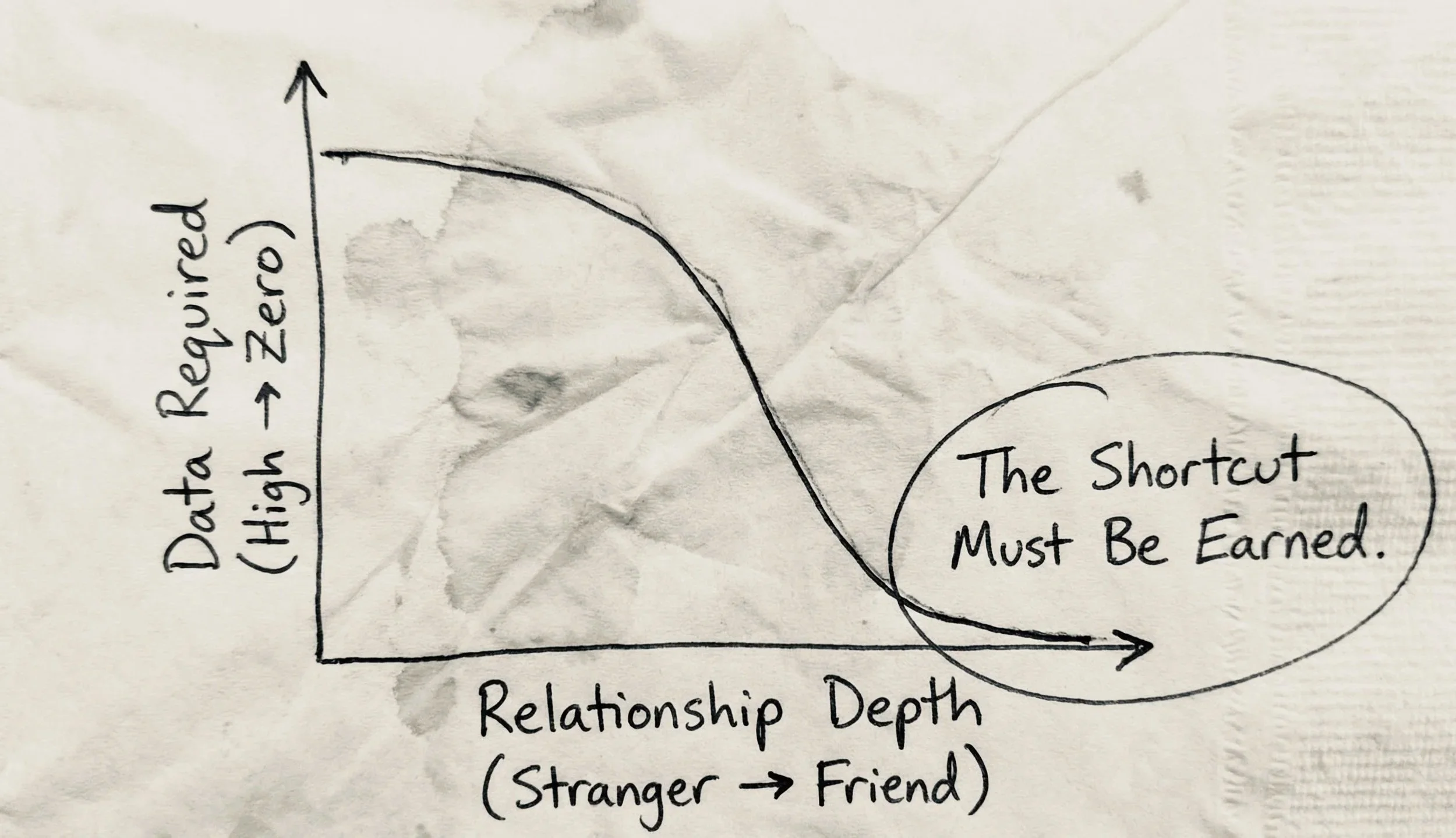

The Shortcut Must Be Earned

The message was tiny, but the result was perfect. Why? Because the recipe wasn’t in the text message. The recipe was already in the receiver’s head.

This reveals the hidden law of compression: The shortcut must be earned. You can only use the pointer, “The pizza we had last week,” because you already invested the time to share that reality.

In our industry, technologists call this a “Decoder.” For the purposes of this article, I will call it Embodied Memory.

If you have cooked pizza before, a short instruction like “hot oven” works because your hands remember. You have an internal model built from practice. If you have never cooked, that same message is useless.
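The same effect shows up in ordinary file compression. Here is a minimal sketch, not a model of how AI systems work, using the preset dictionary feature of Python's standard `zlib` module. The recipe text, the `SHARED_MEMORY` constant, and the helper names are all made up for illustration. The dictionary plays the role of the experience both sides already hold.

```python
import zlib

# Hypothetical "embodied memory": text that sender and receiver both already hold.
SHARED_MEMORY = (
    b"Kajmak pizza: standard dough, 65% hydration, rest overnight. "
    b"Bake on high heat until the crust blisters. "
    b"Add the kajmak at the end so it does not burn."
)

# The message to send: mostly things the receiver already knows, plus one new detail.
MESSAGE = SHARED_MEMORY + b" Serves two."


def compress(message: bytes, shared: bytes = b"") -> bytes:
    """Compress a message, optionally against a dictionary both sides share."""
    comp = zlib.compressobj(zdict=shared) if shared else zlib.compressobj()
    return comp.compress(message) + comp.flush()


def decompress(blob: bytes, shared: bytes = b"") -> bytes:
    """Rebuild the message; the receiver must hold the same dictionary."""
    decomp = zlib.decompressobj(zdict=shared) if shared else zlib.decompressobj()
    return decomp.decompress(blob) + decomp.flush()


for_stranger = compress(MESSAGE)                 # no shared memory
for_friend = compress(MESSAGE, SHARED_MEMORY)    # shared memory as dictionary

print(len(MESSAGE), len(for_stranger), len(for_friend))
```

The message for the friend comes out far smaller than the one for the stranger, and both decode to the same recipe. The savings were paid for in advance: the friend only gets the short version because the dictionary was already in place before the message was sent.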

This is the physics of computing.

Every extra step we cannot compress means more compute somewhere. More movement of information through chips. More energy burned.

A single text interaction can cost tenths of a watt-hour. If a knowledge compression breakthrough cuts compute per useful answer by half, that energy halves too. At scale, that is not a rounding error. It changes what is possible on today’s hardware.
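To see what "at scale" means, here is a back-of-the-envelope sketch. Every number in it is an assumption chosen for illustration: 0.3 Wh per interaction (one reading of "tenths of a watt-hour"), one billion interactions a day, and energy that scales in direct proportion to compute.

```python
# All constants below are illustrative assumptions, not measurements.
WH_PER_QUERY = 0.3               # assumed energy per text interaction, in watt-hours
QUERIES_PER_DAY = 1_000_000_000  # assumed daily interactions across a large service
COMPUTE_REDUCTION = 0.5          # the "cut compute per useful answer by half" scenario


def daily_energy_mwh(wh_per_query: float, queries_per_day: int) -> float:
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return wh_per_query * queries_per_day / 1_000_000


# Assumes energy falls in direct proportion to compute.
before = daily_energy_mwh(WH_PER_QUERY, QUERIES_PER_DAY)
after = daily_energy_mwh(WH_PER_QUERY * (1 - COMPUTE_REDUCTION), QUERIES_PER_DAY)

print(f"before: {before:.0f} MWh/day, after: {after:.0f} MWh/day")
print(f"saved per year: {(before - after) * 365 / 1000:.2f} GWh")
```

Under these assumptions, the daily total drops from about 300 MWh to about 150 MWh. Change the inputs and the totals change, but the proportional saving stays the same.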

The World After

When we solve this, the benefit is not just speed. It is Access. Knowledge compression is how AI becomes equal across income, geography, and devices. If an AI model is heavy, it belongs to the few. But when AI has its MP3 moment, it will run offline on a five-year-old mobile phone in rural Kenya. A doctor there doesn’t need a supercomputer. They need access to the same medical reasoning as a top research hospital, condensed and liquid, available right now.

When the cost of intelligence drops to near zero, AI stops being a product we buy. It becomes Ambient Intelligence.

AI is the sun.

Embodied Memory is the lens.

Knowledge compression decides how far the light can travel.

If this felt like signal, help it travel. brankolukic.com : copyself_xyz
